Xun Huang (/shuun hwang/)

Email | Google Scholar | Twitter/X | GitHub | Publications | Teaching | Blog

I was previously a Research Scientist at Adobe Research, an Adjunct Professor at CMU, and a Research Scientist at NVIDIA. I obtained my PhD in Computer Science from Cornell in 2020, advised by Professor Serge Belongie.

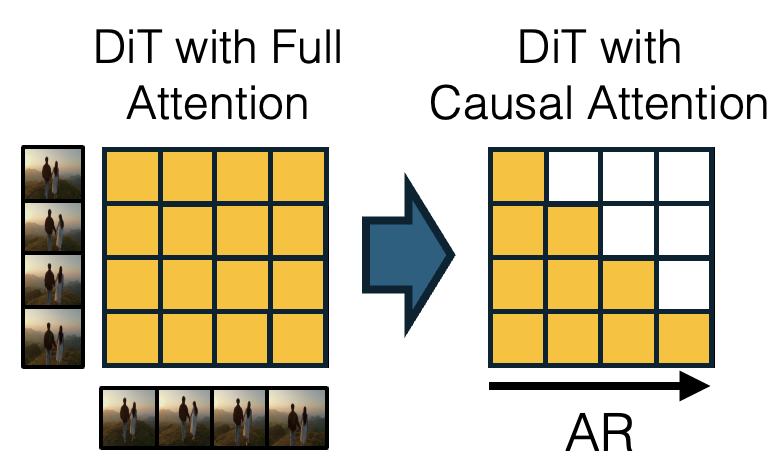

I invented architectures and algorithms that have enabled autoregressive real-time video generation, including Self Forcing and Autoregressive Diffusion Transformers (CausVid). Previously, I developed one of the first public text-to-image demos (GauGAN2), as well as NVIDIA's first text-to-image and text-to-3D foundation models. My research has been cited over 17,000 times as of Dec 2025.

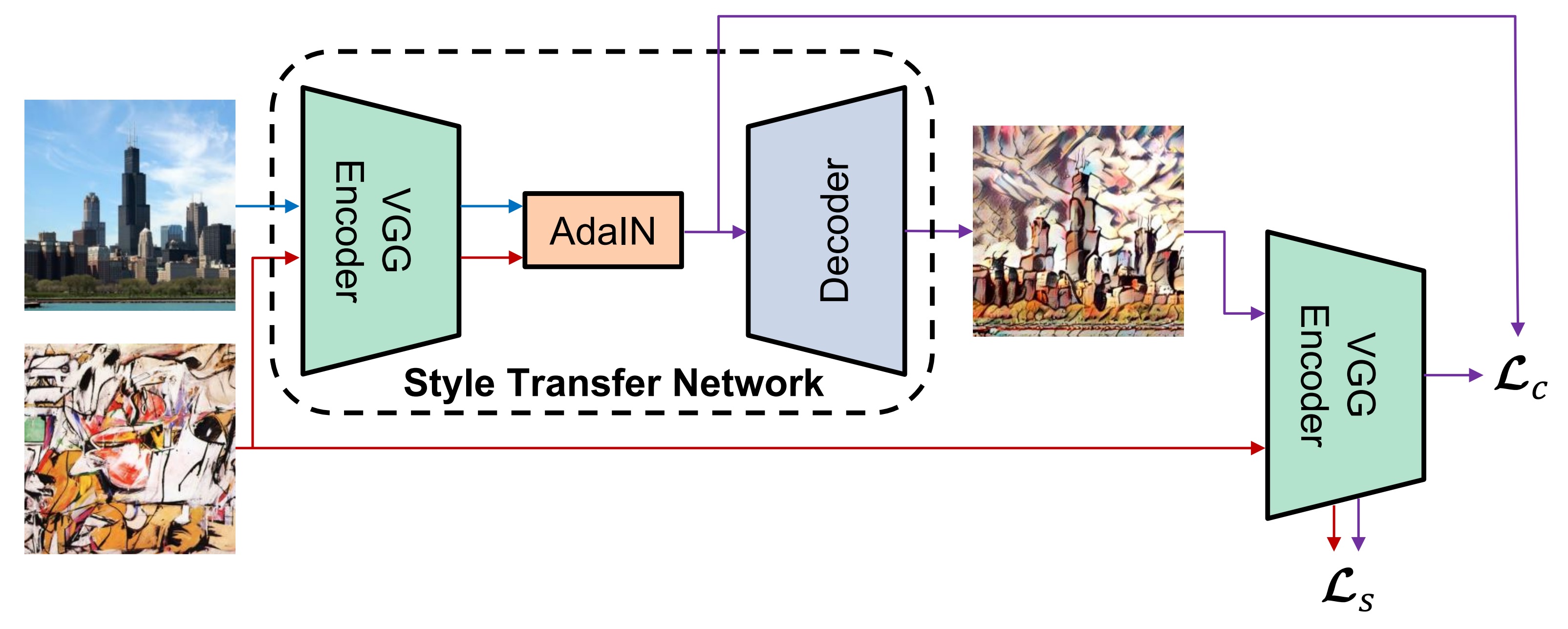

I have been working on multimodal "Generative AI" for 10 years. During my PhD, I invented Adaptive Instance Normalization (AdaIN) and was the first to demonstrate its effectiveness in generative neural networks. AdaIN became a foundational component of StyleGAN and played a key role in the first working diffusion model. Variants of AdaIN are now used in nearly all diffusion models. My PhD research was supported by the Adobe Research Fellowship (2019), the Snap Research Fellowship (2019), and the NVIDIA Graduate Fellowship (2018).

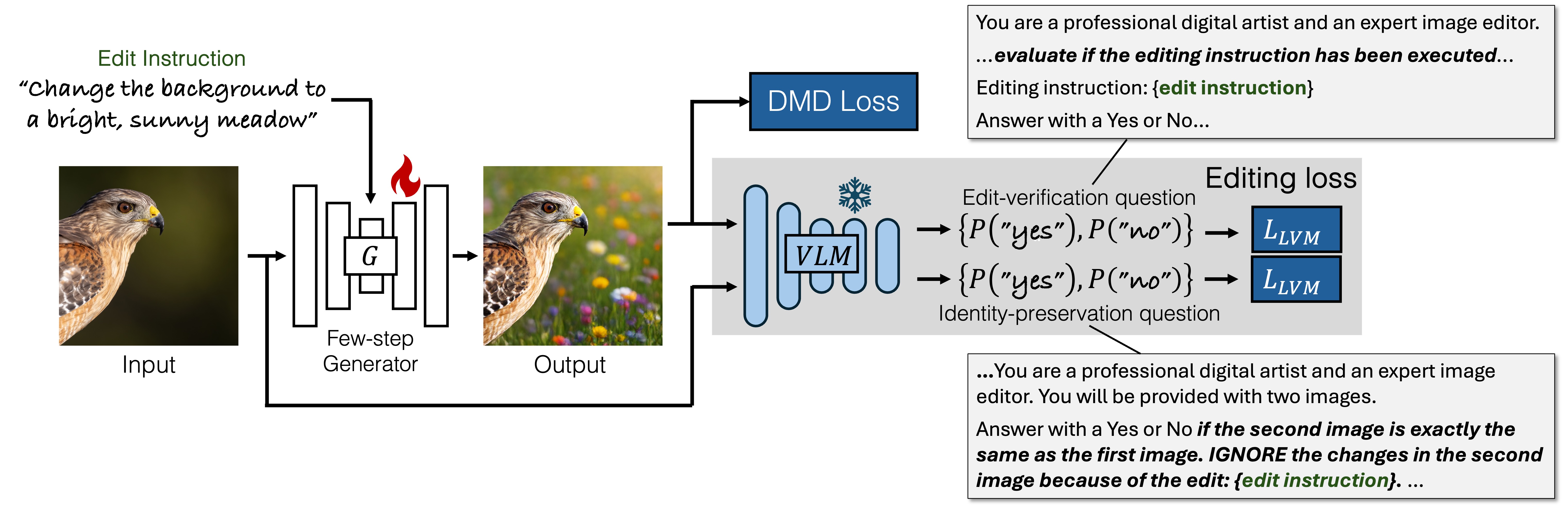

ICCV 2025 (Best Paper @ CVPR 2025 T4V Workshop)

Sicheng Mo, Thao Nguyen, Xun Huang, Siddharth Srinivasan Iyer, Yijun Li, Yuchen Liu, Abhishek Tandon, Eli Shechtman, Krishna Kumar Singh, Yong Jae Lee, Bolei Zhou, Yuheng Li

[arXiv] [Project]

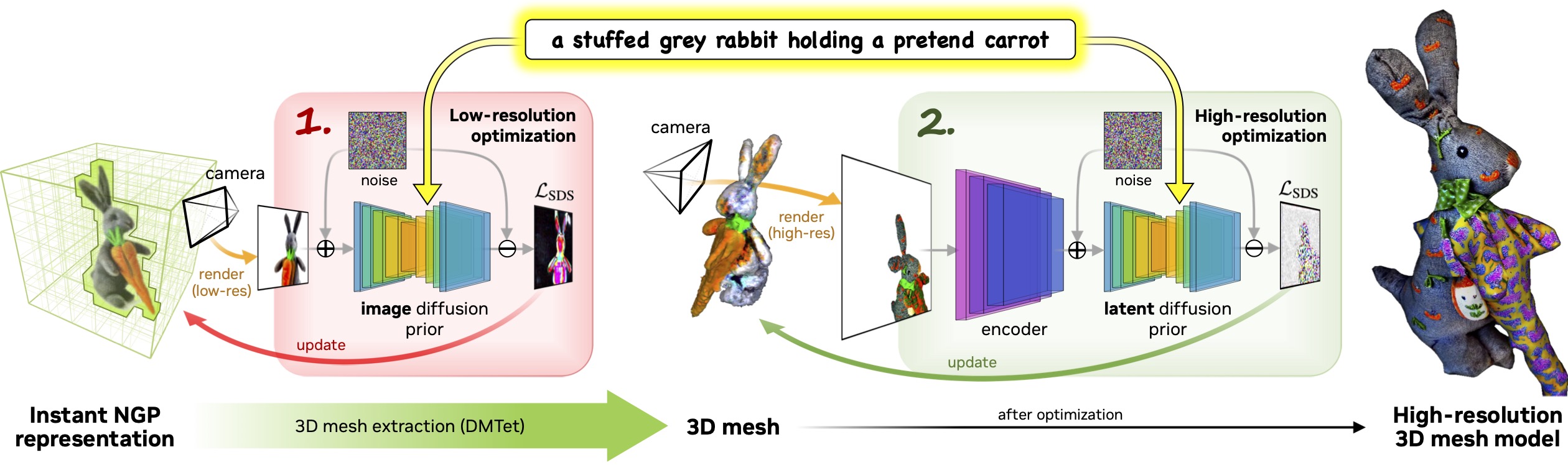

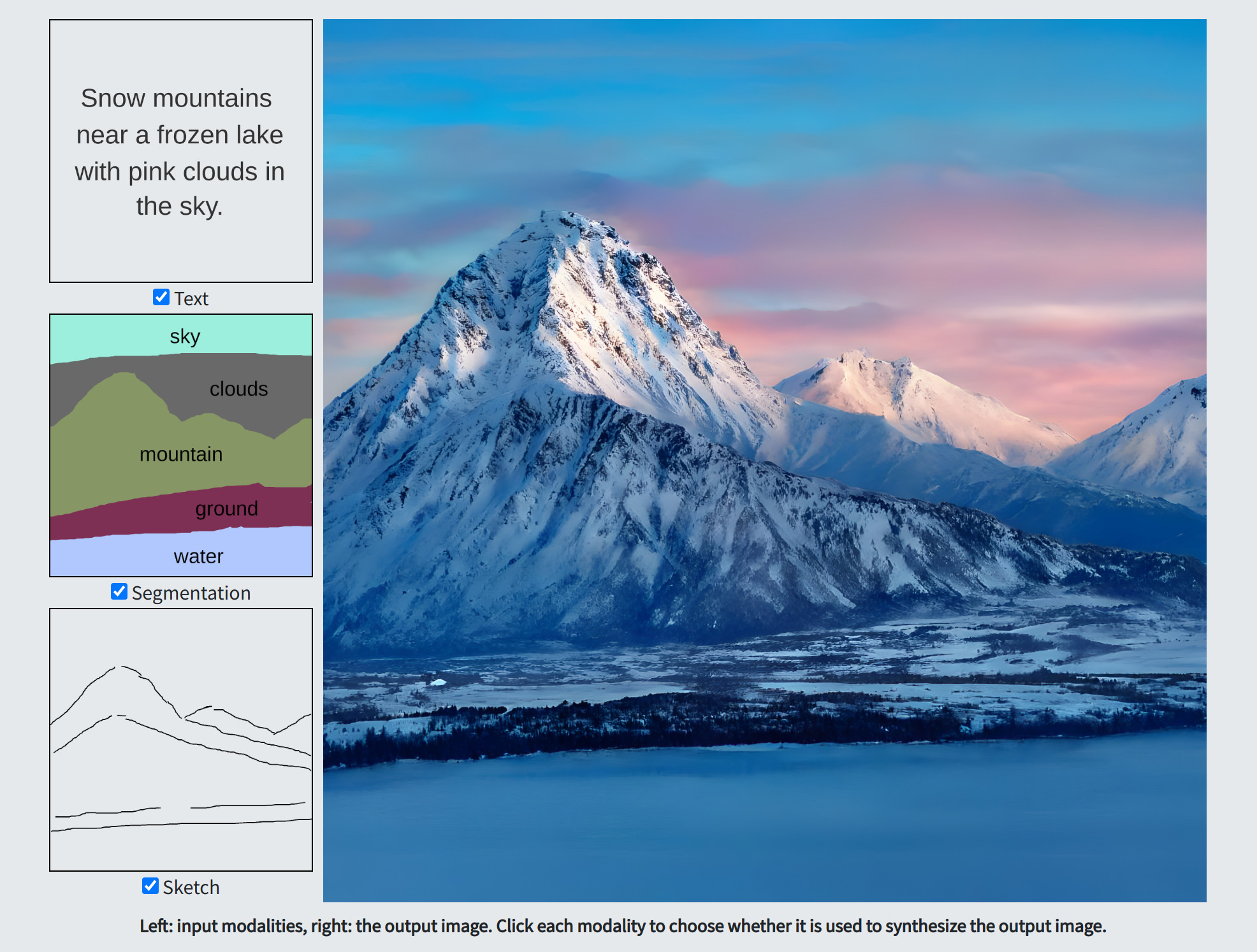

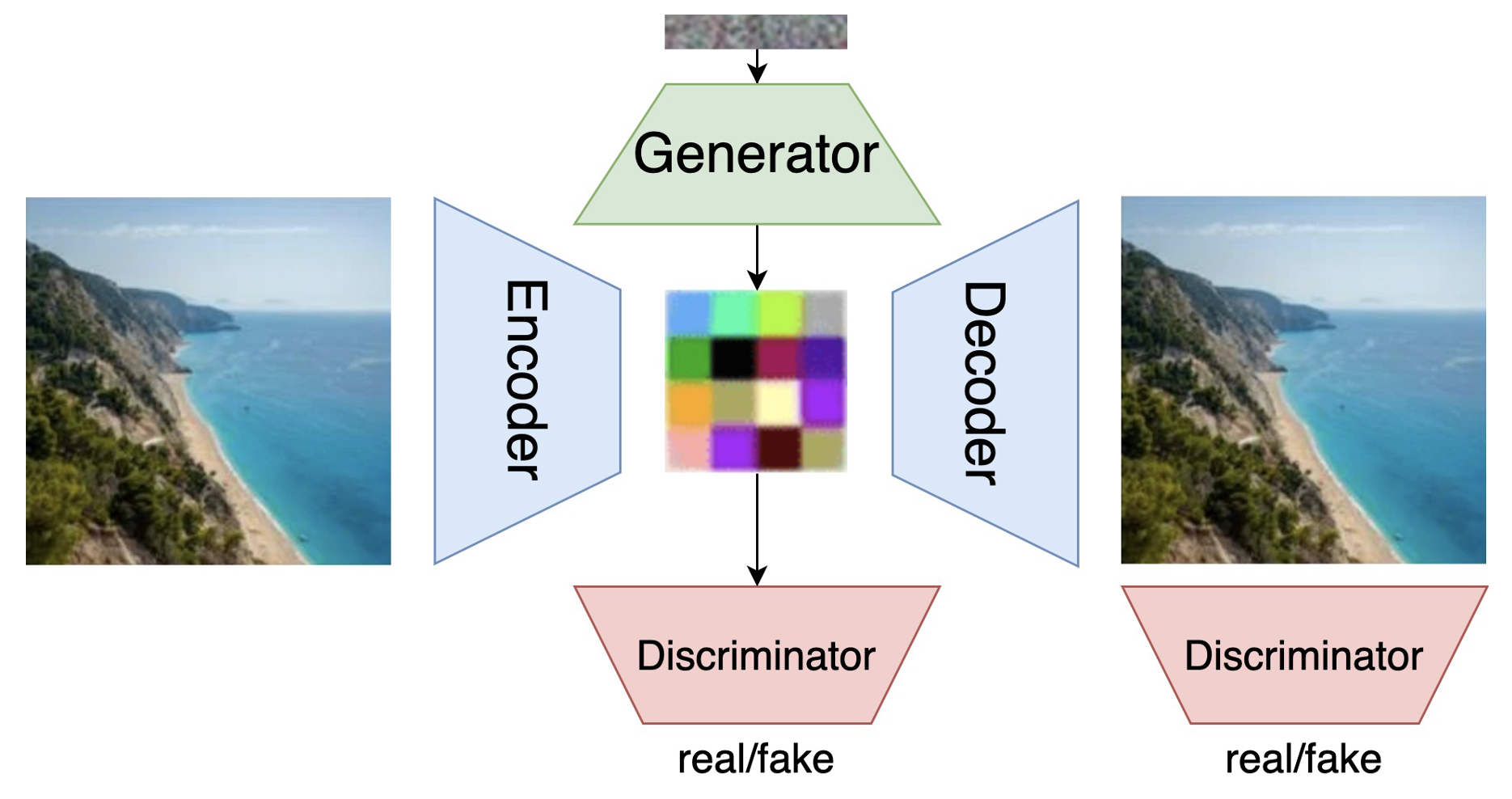

ECCV 2022

Xun Huang, Arun Mallya, Ting-Chun Wang, Ming-Yu Liu

[arXiv] [Project] [Video] [Two Minute Papers]

I have been fortunate to work with many talented students and interns: